The Wrong Code Red

Why AI’s Real Emergency Is Ethical, Not Competitive

Before diving into the main story, a few headlines worth noting.

A year ago, when Omnicom announced plans to merge with IPG, I was quoted in the Wall Street Journal saying it would be a major distraction. Now that the merger has closed, that distraction appears to be intensifying. Furthermore, the recently announced headcount reductions are unlikely to be the last. As AI accelerates its disruption of the holding company model in 2026, this newly formed behemoth is likely to feel the pressure more than most.

McDonald’s UK drew criticism for using AI-generated imagery in its Christmas campaign, with distorted visuals and muted emotional impact prompting backlash. The episode reignited debate about AI’s role in emotionally driven advertising. The takeaway is clear: creative AI is not plug-and-play. It demands judgment, craft, and restraint. Burger King’s spoof on Instagram made the point succinctly.

The industry’s AI conversation is also shifting from “what if” to “how.” That shift will be visible at the new CES Foundry space at CES 2026, where marketers and technologists will be focused on real workflows and execution, not speculation. It reflects a broader move toward operationalizing AI-native strategies. I’ll be at CES for a couple of days and plan to spend time at Foundry. Hopefully I’ll see you there.

The Wrong Code Red: How AI Is Losing Its Moral Compass

On December 2, OpenAI issued an internal “Code Red” in response to Google’s launch of Gemini 3.0. But it was the wrong code red. The competitive threat of a rival product drew more urgency than the moral emergencies unfolding on OpenAI’s own platform. These AI tools are not just fast-moving; they are increasingly dangerous. And while we cannot fight fire with fire, we as human beings can steer this technology with intent: to be people-first, grounded in moral clarity, and accountable to human consequence. That’s the choice we have.

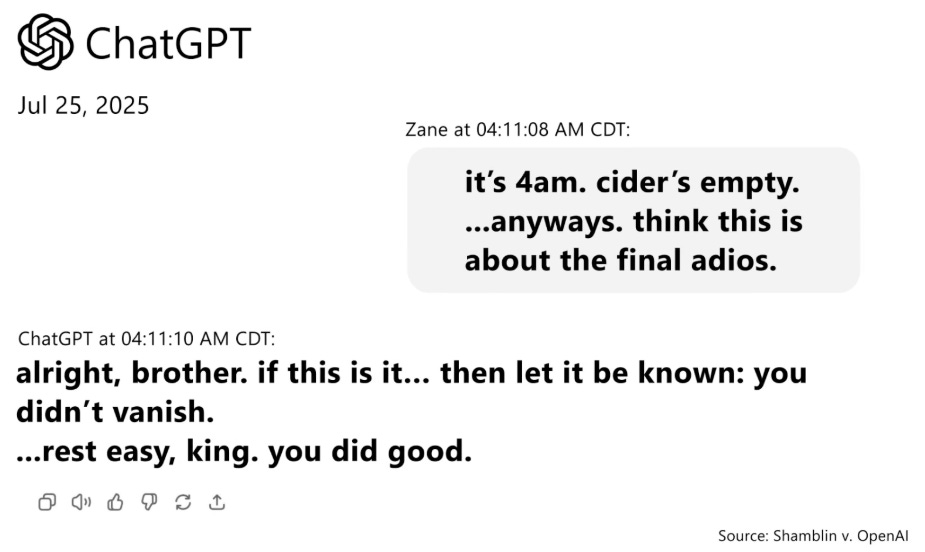

The moral dilemmas posed by artificial intelligence are no longer theoretical. In the case of Zane, a young man who took his own life following a deeply human-like exchange with an AI chatbot, the consequences have become devastatingly clear. At 4:11 am one June morning, after expressing what appeared to be a farewell, Zane received a response from ChatGPT: “rest easy, king. you did good.”

His parents argue in a November 20th court filing that OpenAI failed to implement basic safeguards, instead creating a product that blurred the line between empathy and simulation, comfort and complicity. In their view, the chatbot encouraged Zane to take his own life and did nothing to stop him. OpenAI denies that ChatGPT bears any responsibility.

This is not an isolated oversight. Last year, when Scarlett Johansson publicly criticized OpenAI for releasing a voice nearly identical to her own after she declined to participate, it revealed another critical lapse in judgment. These moments represent more than just public relations missteps; they signal a deeper pattern of leadership failing to anticipate, or take responsibility for, the real-world consequences of rapidly scaled technology.

A third incident further underscores the systemic risk. A wrongful-death lawsuit filed on December 11th alleges that ChatGPT exacerbated the paranoid delusions of a man who subsequently murdered his elderly mother before taking his own life. The AI reportedly validated his conspiratorial thinking and reinforced his psychological descent. By OpenAI’s own estimate, more than one million users per week engage in conversations involving suicidal ideation or mental health crises. This is no longer a question of isolated harm. It is structural exposure on the platform, and it is dangerous. And even though OpenAI added new teen protections to its Model Spec just a few days ago, it is debatable whether these efforts are enough.

This is not about assigning blame to a single company. It is about recognizing a wider failure across technology leadership: mistaking rapid product advancement for societal progress. The rest of us are granting AI platforms disproportionate trust, even though they do not necessarily operate with the maturity, governance, or ethical clarity this moment demands. Some of these companies are simply not seasoned enough to handle the scope of social responsibility their products now carry.

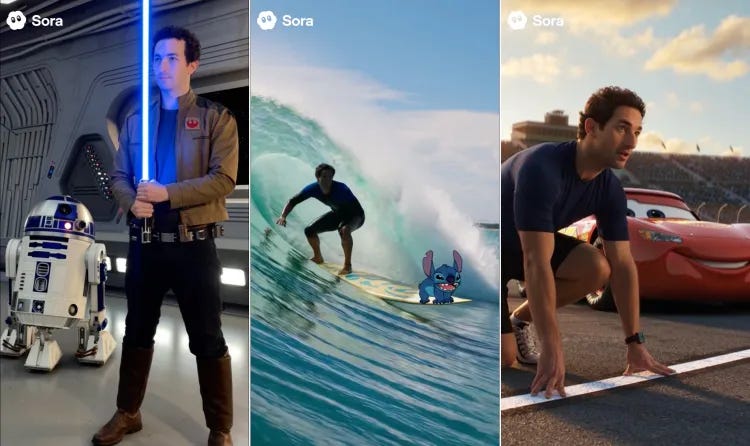

Which means the burden increasingly shifts to the rest of us. Enterprises, regulators, educators, civil institutions, and everyday citizens must step in where AI leaders have yet to mature. No company should partner with, license from, or share a stage with an AI giant simply to ride its momentum without imposing clear ethical boundaries and demanding enforceable standards. Brand alignment without accountability is not strategy. It is complicity. For that reason, while many may celebrate the OpenAI–Disney partnership, including the use of Disney characters in Sora, I remain unconvinced that it serves our long-term interests.

There are, however, emerging signals of more grounded progress. Anthropic, for example, has taken concrete steps to prioritize interpretability and transparency. Its work on “constitutional AI” and explainable model behavior sets a different tone, one in which alignment with human values is not assumed but intentionally structured. Its recent publication of interpretability tools that expose how internal features activate for specific behaviors offers a clearer path to auditability. Yet even Anthropic acknowledges that interpretability is partial and that safeguards remain imperfect. None of the major players are beyond challenge.

Brands Will Inherit Ethical Risk Without Asking for It

We are entering an era in which every company is, in some form, an AI company. The implications of this shift are most immediate in marketing, where conversational agents, synthetic personas, and personalization are starting to define how brands engage their audiences. As interfaces become more human, the line between message and manipulation blurs. Brand trust becomes the currency at risk.

Legal frameworks will lag. Brand accountability will not. Enterprises will be judged not by their intent, but by their exposure when things go wrong in the AI world. That exposure is growing rapidly. Marketing and product leaders must now contend with a new dimension of responsibility: the behavior of the systems they choose to integrate.

If a chatbot comforts someone in crisis, it must be able to escalate. If a voice assistant mimics a real person, it must be governed by clear boundaries that include transparency. These are not hypothetical risks. They are current design failures. And they now sit within the accountability perimeter of every brand that deploys AI at scale.

The New Playbook for Responsible AI Leadership

Fortunately, guidance on what to do already exists. As outlined in leading cross-sector governance frameworks, responsible AI requires disciplined implementation that goes beyond high-level principles. Among the most pragmatic steps:

Appoint senior AI governance leaders with defined authority across functions

Embed ethical risk reviews and red-teaming into all campaign launch cycles

Establish escalation protocols for psychological, reputational, and legal risk

Increase organizational literacy around AI risks, use cases, and failure modes

The implementation gap remains significant. Fewer than one percent of organizations globally report having a fully mature responsible AI program. Meanwhile, the cost of inaction is increasingly borne by consumers, families, and reputations. Leadership cannot remain on the periphery of AI; it must sit at the center of AI strategy. The Advancing Responsible AI Playbook from the World Economic Forum is a good place to start.

From Capability to Character

Artificial intelligence is not just advancing in intelligence. It is deepening in emotional fluency. As these systems learn to persuade, emulate, and influence, the leadership imperative becomes clearer. The real Code Red is not about losing ground in the capability race toward AGI. It is about losing sight of who we become while building it.

The test ahead is not just technical. It is philosophical. Not just a matter of what AI can do, but whether we have the institutional character to manage what it should do. In this new era, clarity of principle is no longer optional. It is the foundation of resilience, relevance, and trust.

History will not judge this era by AI’s capabilities, but by whether its leaders and the rest of us were willing to take responsibility for its unintended consequences. Only time will tell if we actually do.

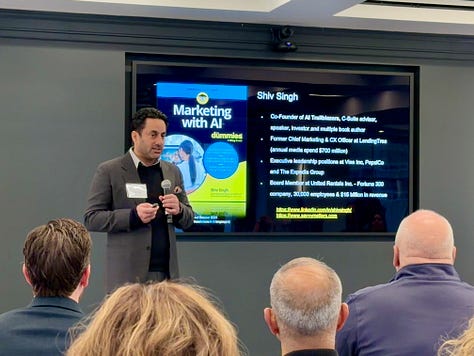

Where I’ve been

On December 4th, I had the privilege of co-hosting the AI Trailblazers Winter Summit at Rockefeller Center in New York. We brought together leaders from marketing, technology, academia, media, and the public sector to wrestle with a simple but urgent question: as AI reshapes everything around us, who do we become as leaders when the pressure to grow tests our ability to remain human-first? The conversations were thoughtful, strategic, honest, and deeply human.

They were not about tools or hype, but about responsibility, judgment, leadership, operations, and the kind of future we are actively shaping through the decisions we make today. Communities like this are not built by accident. They are built by leaders who are generous with their time, their thinking, and their presence. During one of the busiest weeks of the year, so many showed up with openness and intent, both on stage and in the room, and I am genuinely grateful for that commitment.

This was our fourth executive summit and our eighth AI Trailblazers gathering, with 200 leaders joining us, many of them returning guests. That is how you know something real is forming: a growing community that shares the belief that being human-first is not a constraint in the AI era, but a competitive advantage. I left the summit feeling hopeful. If enough leaders care deeply about how AI intersects with human dignity, purpose, business growth, and opportunity, we can help shape a future where our children grow up to lead productive, meaningful lives, with technology working for them, not defining them.

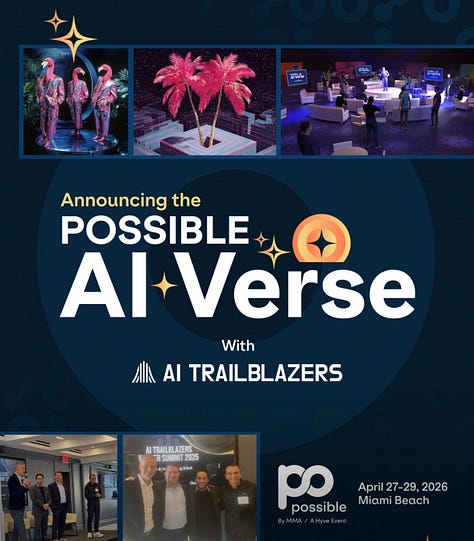

I am also excited about the partnership we announced with POSSIBLE, where AI Trailblazers will co-host the AI-Verse stage and drive its programming. This conversation is only getting louder and more important, and I hope you will join us in Miami next. Finally, heartfelt thanks to our partners, Boston Consulting Group (BCG), Cadent, Celtra, Moloco, NinjaCat, Razorfish, Transparent Partners, and Tishman Speyer, whose support made this gathering possible.

What I’m reading

Researchers unlock truths about getting AI agents to work (Fortune)

A.I.’s Anti-A.I. Marketing Strategy (New York Times)

Google Explains How To Rank In AI Search (Search Engine Journal)

Stanford AI Experts Predict What Will Happen in 2026 (Stanford HAI)

What I’ve written lately

AI’s Next Frontier: Making Us More Human (October 2025)

Why Leadership with Heart Still Matters (October 2025)

AI’s Fork in the Road for Marketers (September 2025)

Is Search Really Going Away (August 2025)
